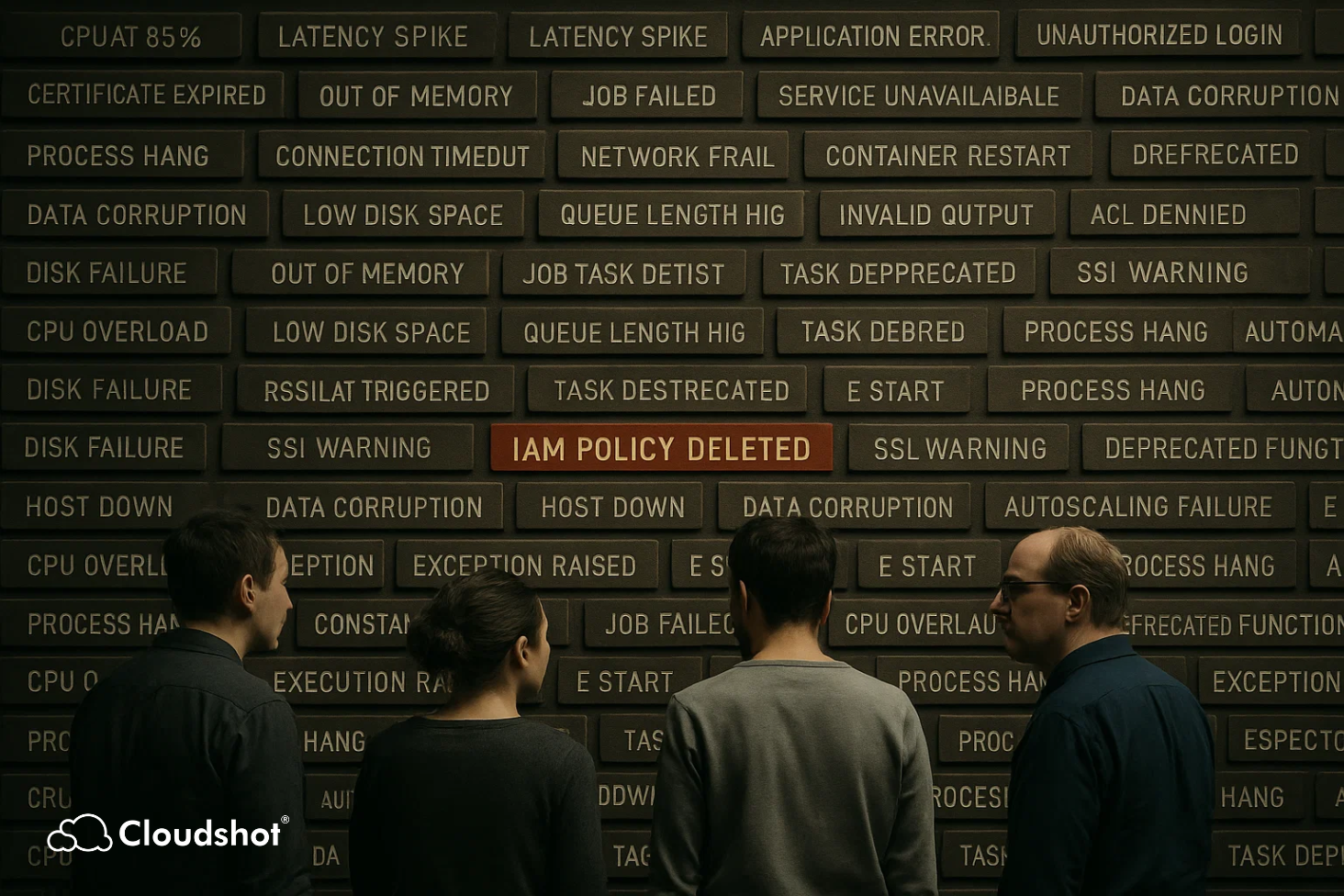

They were getting alerts—plenty of them.

Disk thresholds. CPU spikes. Latency jumps. Every five minutes, something pinged. But when the real outage hit, no one noticed. The damage was done before anyone logged in.

This is the DevOps paradox of 2025: We have more alerts than ever—but less trust in them.

Across SaaS teams, alert fatigue is quietly killing cloud response velocity. It's not because engineers are lazy. It's because the tools are loud, fragmented, and context-blind.

Let's break it down.

Too Many Alerts, Too Little Signal

Modern cloud environments—especially across AWS, Azure, and GCP—generate a relentless stream of metrics. Every dip in performance, every blip in latency, every resource threshold crossed can become an alert.

But what starts as vigilance quickly turns into chaos.

Teams learn to tune things out. Slack channels flood. PagerDuty keeps buzzing. Grafana lights up. And no one's sure anymore which of those 900 alerts actually matters.

The result? When a real problem hits, like an IAM misconfiguration or a silent drift in S3 policy, it's buried in noise. By the time someone pieces it together, trust is broken and customers are already impacted.

Why Dev Teams Lose Faith in Alerts

Over-alerting builds numbness

When everything's "critical," the team tunes out. Engineers shouldn't have to mentally filter which alerts to trust.

There's no context for urgency

An alert saying "latency up 5%" means nothing unless you know it's affecting a production endpoint or a compliance-critical workload.

Investigation becomes painful

DevOps tools often work in silos: logs, metrics, and dashboards, all disconnected. Piecing an incident together across them is slow, confusing, and high-stress.
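To make the context problem concrete, here is a minimal Python sketch of attaching context to an alert before deciding how loudly to raise it. Everything in it is a hypothetical illustration: the `RESOURCE_CONTEXT` inventory, the resource names, and the `page` / `ticket` / `log` tiers are assumptions, not part of any specific tool.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    resource: str
    message: str


# Hypothetical inventory: what each resource is and why it matters.
RESOURCE_CONTEXT = {
    "checkout-api": {"environment": "production", "compliance_critical": True},
    "search-api": {"environment": "production", "compliance_critical": False},
    "staging-worker": {"environment": "staging", "compliance_critical": False},
}


def urgency(alert: Alert) -> str:
    """Rank an alert by what it touches, not by the raw metric alone."""
    ctx = RESOURCE_CONTEXT.get(alert.resource, {})
    in_production = ctx.get("environment") == "production"
    if in_production and ctx.get("compliance_critical"):
        return "page"    # wake someone up
    if in_production:
        return "ticket"  # handle during working hours
    return "log"         # record it, do not interrupt anyone
```

The same "latency up 5%" message lands in three different places depending on the resource behind it, which is the filtering engineers otherwise have to do in their heads.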

Cloudshot: Designed to Restore Trust in Alerts

Cloudshot doesn't just monitor. It maps, prioritizes, and guides.

Instead of bombarding teams with dozens of unranked alerts, Cloudshot filters events through a real-time topology of your multi-cloud architecture.

You only get notified when downstream services or critical nodes are truly impacted

You can see the blast radius—visually, instantly

You know what changed, where it started, and what's at risk
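The idea of topology-aware filtering can be sketched in a few lines of Python. This is not Cloudshot's implementation, which the article does not describe. It is an illustrative assumption of how a blast radius might be computed over a dependency graph, with a made-up `DEPENDENTS` map and `CRITICAL` set.

```python
from collections import deque

# Hypothetical topology: each resource maps to the services that depend on it.
DEPENDENTS = {
    "s3-policy": ["asset-service"],
    "asset-service": ["web-frontend"],
    "iam-role": ["billing-api"],
    "billing-api": [],
    "web-frontend": [],
    "batch-report": [],
}

# Hypothetical set of nodes the business cannot afford to lose.
CRITICAL = {"web-frontend", "billing-api"}


def blast_radius(origin, dependents):
    """Return the origin plus everything downstream of it (breadth-first)."""
    seen = {origin}
    queue = deque([origin])
    while queue:
        node = queue.popleft()
        for downstream in dependents.get(node, []):
            if downstream not in seen:
                seen.add(downstream)
                queue.append(downstream)
    return seen


def should_notify(origin, dependents=DEPENDENTS, critical=CRITICAL):
    """Notify only when the change can reach a critical node."""
    return bool(blast_radius(origin, dependents) & critical)
```

In this sketch, a change to `s3-policy` reaches `web-frontend` and triggers a notification, while a change to `batch-report` touches nothing critical and stays quiet.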

This context is the difference between guessing and knowing.

With Cloudshot, incident response isn't a fire drill. It's a controlled, confident maneuver.

Results That Speak for Themselves

Teams using Cloudshot report:

One DevOps lead said it best: "Now we only get alerts when something actually breaks. It's changed how our team breathes."

It's Not More Alerts. It's Better Alerts.

If your DevOps team is drowning in noise, you don't need a new notification system.

You need clarity.